So you just unboxed, racked, and deployed a new Nutanix cluster, and your boss asks how you know it’s ready to handle workloads. Sure, you can say with confidence that it’s ready, but what if you could hand over results showing that the cluster not only meets your resiliency requirements but also delivers the performance your workloads need?

In the world of infrastructure, you don’t want to wait until something breaks to know if your platform is resilient. You also need to be confident that your systems can handle the workload they’re designed for. Enter Nutanix X-Ray, a powerful, automated testing tool that helps you validate the performance and resilience of your Nutanix platform before disaster strikes.

What is Nutanix X-Ray?

Nutanix X-Ray is designed to simulate real-world scenarios that stress test your environment, from storage to compute, and validate that your system is functioning optimally. Whether you’re preparing for a major deployment, undergoing an upgrade, or just want peace of mind, X-Ray puts your platform through its paces without any of the drama.

How Nutanix X-Ray Approaches Testing

X-Ray tests Nutanix environments using pre-defined scenarios focusing on the most critical aspects of a platform’s operation: resiliency, performance, and availability. Each test scenario is designed to mimic real-world operational issues or high-stress periods.

Requirements for Running Nutanix X-Ray

Before you dive into running tests with Nutanix X-Ray, it’s important to understand the system requirements and environment setup needed to use the tool effectively. Here are the key requirements:

1. Nutanix Cluster Compatibility

- X-Ray requires a Nutanix cluster running a supported version of Nutanix AOS (Acropolis Operating System). The cluster should have enough resources to handle simulated workloads and failures during the testing process.

- Supported hypervisors include Nutanix AHV or VMware ESXi. Make sure that the hypervisor version running on your cluster is compatible with X-Ray.

2. Dedicated Hardware Requirements

- Nodes: To ensure that X-Ray doesn’t interfere with production workloads, it’s recommended to run X-Ray on a dedicated test environment or a subset of nodes that can be isolated from critical workloads.

- Storage: X-Ray will stress the storage subsystem during tests, so adequate storage capacity and performance are required. SSDs are preferred for high-performance testing.

- Networking: Ensure networking components (NICs, switches, and VLANs) are correctly configured to avoid bottlenecks during network performance testing.

Because of the kind of testing X-Ray does, it’s recommended not to deploy it on the same cluster you’ll be testing. That makes sense, right? If you’re running an impactful test such as an availability test, where nodes are powered off, the X-Ray VM itself could be taken down and disrupt the test. The Nutanix caution notes say it pretty well!

3. X-Ray Installation Requirements

X-Ray is deployed as a virtual machine within a Nutanix cluster. The VM acts as the controller, orchestrating tests, collecting data, and generating reports. Additional workload VMs will be spun up and down dynamically to support the workload tests. The specs on these VMs will vary depending on the tests being run.

- vCPUs: A minimum of 4 vCPUs is recommended for the X-Ray VM.

- Memory: Allocate at least 16 GB of RAM for the X-Ray VM.

- Storage: The X-Ray VM requires approximately 100 GB of storage for logs, scenarios, and performance results.
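If you script your deployments, a quick sanity check on the planned VM sizing can catch an undersized controller before you power it on. Here is a minimal sketch that only compares a planned spec against the minimums listed above. The names `VmSpec` and `sizing_shortfalls` are mine for illustration and are not part of X-Ray or any Nutanix tooling.

```python
from dataclasses import dataclass

# Minimums copied from the list above. Everything else in this sketch
# (class and function names) is hypothetical, not an X-Ray API.
MIN_VCPUS = 4
MIN_MEMORY_GB = 16
MIN_STORAGE_GB = 100


@dataclass(frozen=True)
class VmSpec:
    """Planned sizing for the X-Ray controller VM."""
    vcpus: int
    memory_gb: int
    storage_gb: int


def sizing_shortfalls(spec: VmSpec) -> list[str]:
    """Return one message per minimum that the planned spec misses."""
    checks = [
        ("vCPUs", spec.vcpus, MIN_VCPUS),
        ("memory (GB)", spec.memory_gb, MIN_MEMORY_GB),
        ("storage (GB)", spec.storage_gb, MIN_STORAGE_GB),
    ]
    return [
        f"{name}: planned {actual}, need at least {minimum}"
        for name, actual, minimum in checks
        if actual < minimum
    ]


# Example: a VM that is short on memory only.
for problem in sizing_shortfalls(VmSpec(vcpus=4, memory_gb=8, storage_gb=100)):
    print(problem)
```

An empty list means the planned VM meets all three minimums. Remember that the workload VMs X-Ray spins up are sized separately and depend on the tests you run.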

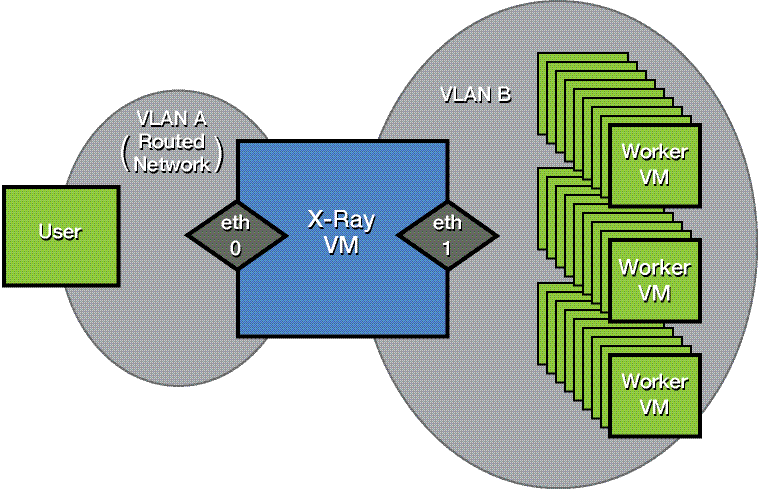

Networking is important when it comes to an X-Ray deployment. I generally use the Zero-Configuration network option to ease the deployment. Give the X-Ray VM a second NIC, and map the workload VMs to that subnet.

4. Access Permissions

- Admin-level access: Ensure that the X-Ray VM has administrator-level permissions on the Nutanix cluster and access to manage the hypervisor (either AHV or ESXi). This allows X-Ray to simulate failures like node crashes, disk failures, and network partitions.

- API Access: X-Ray leverages the Nutanix Prism Central API for collecting metrics and orchestrating tests, so API access should be enabled and configured.

5. Additional Configurations

- IPMI (OOB) Connectivity: Some tests power nodes off and back on. For those, configure the target cluster’s out-of-band (OOB) management protocol in X-Ray so it can perform the power on/power off actions.

Testing Scenarios and Workloads

X-Ray comes with pre-built scenarios that are ready to use, but you also have the flexibility to create custom scenarios that better align with your specific environment and workloads. This requires familiarity with the workloads you want to simulate, so you can configure X-Ray to match those conditions.

Some testing scenarios, like performance validation, will need an understanding of the applications and workloads (e.g., IOPS, data transfer patterns) that are critical to your environment. If you are replicating a production workload, try to match the workload profile as closely as possible.
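To make "match the workload profile" a little more concrete, here is a rough sketch of how you might turn a few counters from a production system into the numbers a storage test needs. It assumes you already have average read IOPS, write IOPS, and throughput from your own monitoring. The function and field names are hypothetical, and the arithmetic is just the usual relationship between throughput, IOPS, and block size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadProfile:
    """Derived numbers you could use when tuning a storage test."""
    total_iops: float
    read_percent: float
    avg_block_size_kib: float


def profile_from_counters(read_iops: float, write_iops: float,
                          throughput_mib_s: float) -> WorkloadProfile:
    """Derive a simple profile from observed production averages.

    Average block size follows from throughput = IOPS x block size.
    """
    total = read_iops + write_iops
    if total <= 0:
        raise ValueError("need a non-zero I/O rate to derive a profile")
    return WorkloadProfile(
        total_iops=total,
        read_percent=100.0 * read_iops / total,
        avg_block_size_kib=throughput_mib_s * 1024.0 / total,
    )


# Illustrative inputs only, not measurements from a real system.
print(profile_from_counters(read_iops=7000, write_iops=3000,
                            throughput_mib_s=78.125))
```

Averages hide a lot (bursts, random versus sequential access, working set size), so treat the result as a starting point rather than a faithful copy of production.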

I ran a sample workload test against my lab cluster, which is based on much older G5 hardware, but still kicking! The test I ran was the HCI Benchmark test, which provides the following:

This test allows you to run customized storage workloads on one or more VMs. You can adjust common storage microbenchmark parameters, including the working set size, block size, and the target I/O rate. Workloads are evenly distributed across every disk on every VM. Setting the target I/O rate to 0 performs a max throughput test. Higher IOPS and lower latency indicate better performance.

During this test, which runs for about 15-20 minutes including cleanup, the following happens:

Setup

- Deploy the desired number of workload VMs per host.

- Fill virtual disks with the desired amount of random data.

Measurement

- Run the desired workload configuration for the requested amount of time across each VM.
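Before kicking off a run, I find it useful to work out what the chosen parameters actually ask of the cluster. The sketch below does that arithmetic under a couple of assumptions of mine: the target I/O rate is treated as a per-VM value, and the even spread across disks is modeled as a simple division. The `BenchmarkPlan` class is hypothetical and is not how X-Ray represents a test internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BenchmarkPlan:
    """Hypothetical model of the benchmark parameters described above."""
    hosts: int
    vms_per_host: int
    disks_per_vm: int
    block_size_kib: int
    target_iops_per_vm: int  # assumption: per VM; 0 means max throughput

    @property
    def total_vms(self) -> int:
        return self.hosts * self.vms_per_host

    @property
    def is_max_throughput(self) -> bool:
        # A target rate of 0 removes the cap, so there is nothing to predict.
        return self.target_iops_per_vm == 0

    def iops_per_disk(self) -> Optional[float]:
        """Per-disk target when the load is spread evenly across a VM's disks."""
        if self.is_max_throughput:
            return None
        return self.target_iops_per_vm / self.disks_per_vm

    def cluster_throughput_mib_s(self) -> Optional[float]:
        """Aggregate throughput implied by the target rate and block size."""
        if self.is_max_throughput:
            return None
        total_iops = self.target_iops_per_vm * self.total_vms
        return total_iops * self.block_size_kib / 1024


plan = BenchmarkPlan(hosts=4, vms_per_host=1, disks_per_vm=4,
                     block_size_kib=8, target_iops_per_vm=2000)
print(plan.iops_per_disk(), plan.cluster_throughput_mib_s())
```

If the implied throughput is far beyond what your network or drives can deliver, the test will effectively behave like a max throughput run anyway, which is worth knowing before you read the graphs.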

After completion, X-Ray presents the test results in both Summary and Detailed Graphs, giving you high-level and detailed data about the test and the cluster’s performance.

There are many tests available to validate the resiliency and performance of a cluster. On deployments, I often run several of them, both to confirm I’m getting the performance I expect from the HCI infrastructure, the network, and even applications (using HammerDB benchmark tests), and to show the customer how resilient the platform is when it comes to power loss, rolling upgrades, and drive failure.

Wrap Up

While I didn’t dive deep into X-Ray and all of the tests, I wanted to show how using X-Ray’s robust testing and validation capabilities helps administrators assess resiliency and performance in their Nutanix clusters. Here’s a quick breakdown of its value:

- Simulates Real-World Scenarios: X-Ray runs various tests that mirror common failure scenarios, such as node or disk failures. This lets administrators observe how the cluster recovers and maintains service, ensuring real-world reliability.

- Automated Testing Framework: It’s designed to be easy to set up, requiring minimal configuration to run complex tests. This automation makes running tests simple and repeatable across clusters or different configurations.

- Customizable Benchmarks: X-Ray allows administrators to fine-tune performance tests to fit their environment’s needs. This allows customers to understand what the platform is capable of, by showing real-world performance trends.

- Clear, Actionable Insights: X-Ray provides detailed, easy-to-read reports, allowing teams to pinpoint performance bottlenecks or weaknesses in the configuration, so they can adjust proactively.

- Comparison to Industry Standards: X-Ray also lets you compare your cluster’s performance to Nutanix’s baseline metrics or industry standards, helping demonstrate the infrastructure’s value and ensuring you’re getting the most out of your hardware.

In essence, Nutanix X-Ray offers a proactive way to verify a cluster’s readiness and durability under stress, assuring stakeholders of high-performance and resilient operations.

If you’ve got a Nutanix cluster deployed, did you run X-Ray before releasing it to production?